Reverse Pareto Principle - Why AI will never reach 100%

Many of the buzziest use-cases for Generative AI are doomed, and the people working on them don’t know it yet. Here’s why:

The Problem

The Pareto Principle, otherwise known as the 80/20 rule, states that roughly 80% of the outcome comes from 20% of the input. It is an empirical pattern observed in many complex systems.

The Pareto Principle is often applied optimistically in business: if you can identify the right 20% of effort, you can capture 80% of the outcome. Tech startups draw on this thinking when they tell the story of how a small team, working on the right problem, can have an outsized impact.

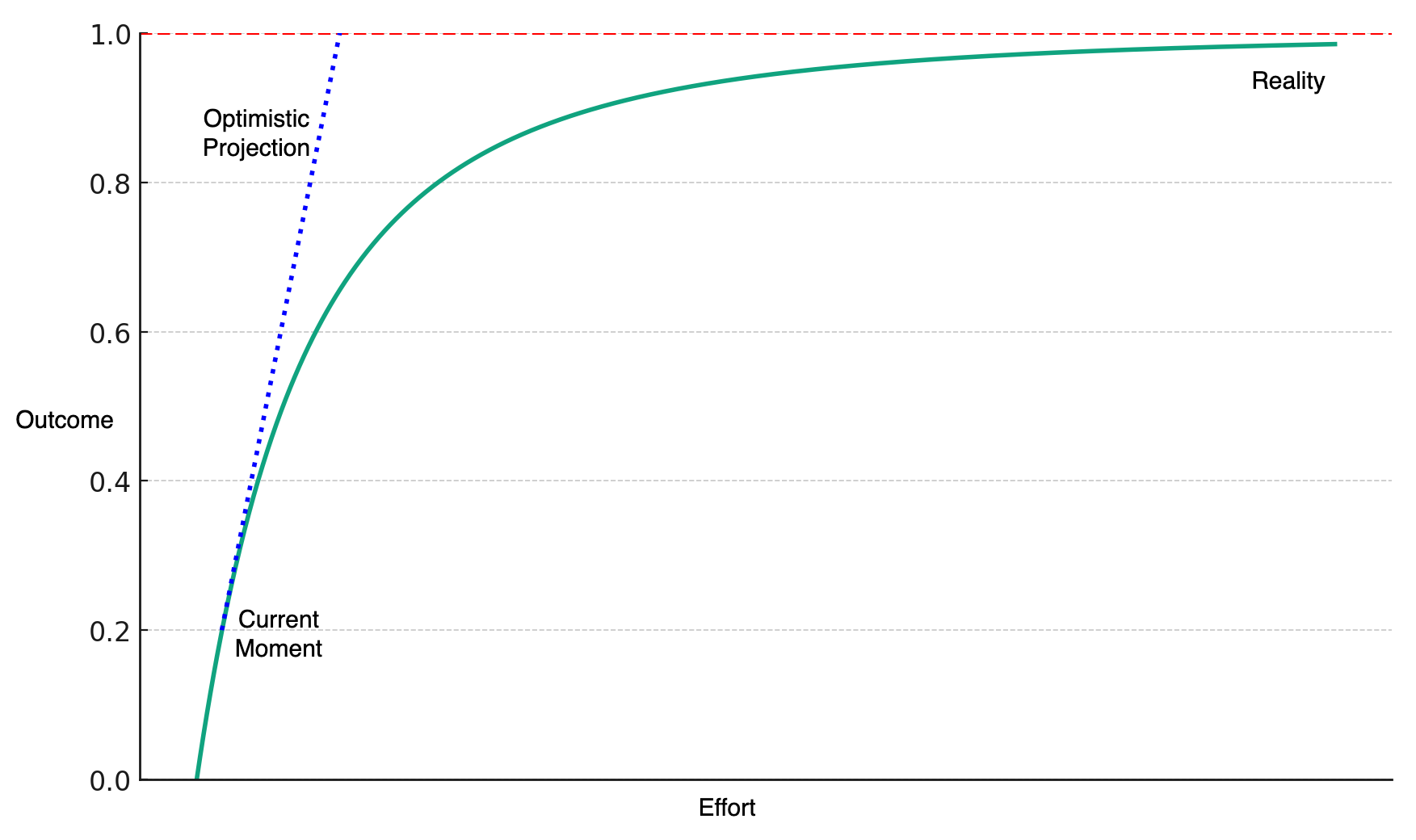

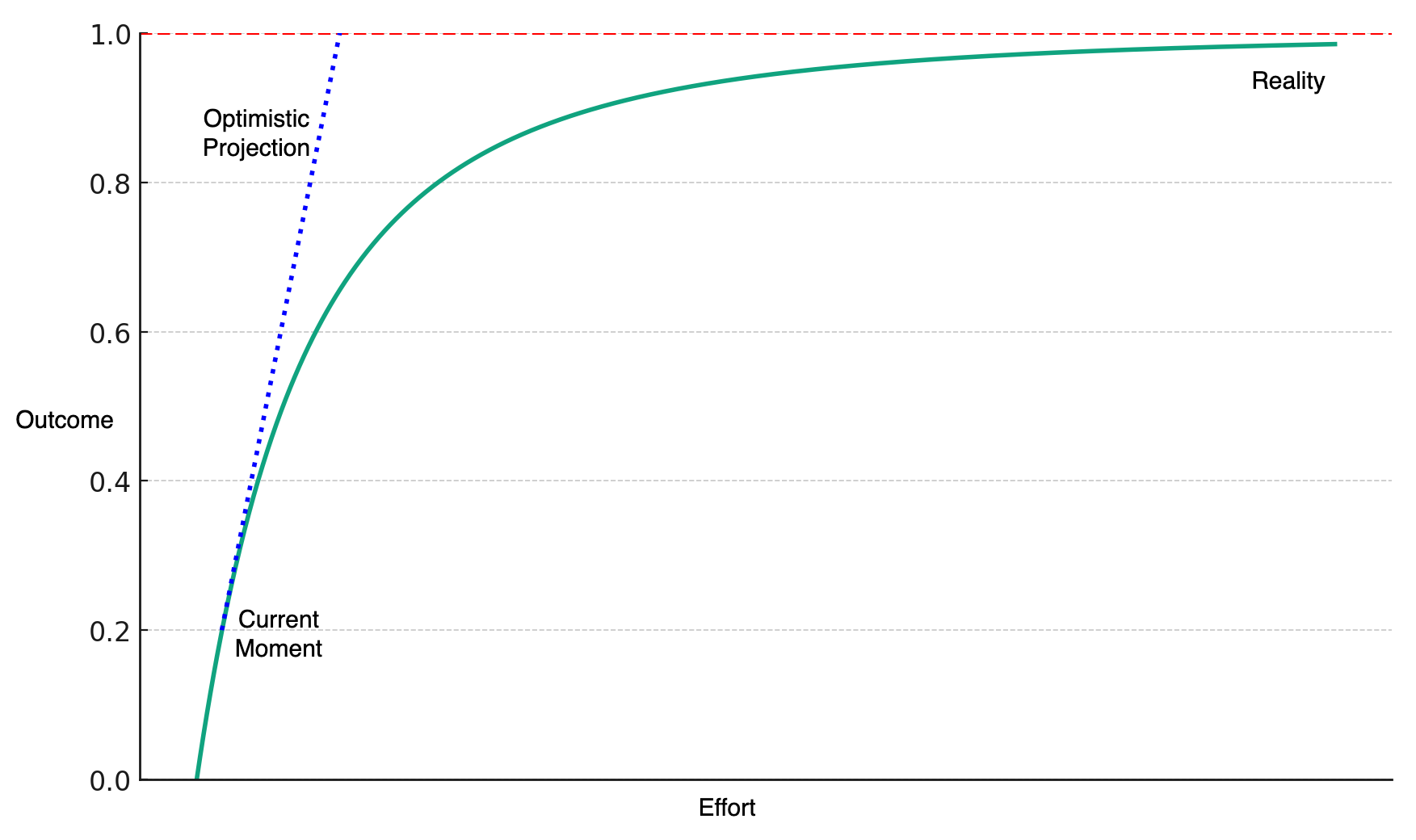

The problem is the Reverse Pareto Principle: the remaining 20% of the outcome takes the remaining 80% of the effort. In fact, it’s even worse than that. The statistical Pareto distribution never actually reaches 100%.
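A minimal sketch makes the asymptote concrete. It uses the standard Pareto cumulative distribution function, F(x) = 1 - (x_m/x)^α for x ≥ x_m, and treats x as effort and F(x) as the share of the outcome achieved. That mapping, and the choice of α = 1 and x_m = 1, are illustrative assumptions rather than measurements. Inverting the CDF gives the effort needed to reach a target share p: x = x_m(1 - p)^(-1/α).

```python
import math

def pareto_cdf(x: float, x_m: float = 1.0, alpha: float = 1.0) -> float:
    """Standard Pareto CDF: F(x) = 1 - (x_m / x) ** alpha for x >= x_m, else 0."""
    if x < x_m:
        return 0.0
    return 1.0 - (x_m / x) ** alpha

def effort_to_reach(p: float, x_m: float = 1.0, alpha: float = 1.0) -> float:
    """Inverse CDF: the effort x at which F(x) = p. Grows without bound as p -> 1."""
    if not 0.0 <= p < 1.0:
        # F(x) < 1 for every finite x, so 100% is never reached.
        return math.inf
    return x_m * (1.0 - p) ** (-1.0 / alpha)

if __name__ == "__main__":
    # Illustrative assumption: effort measured in units of x_m, with alpha = 1.
    for p in (0.8, 0.9, 0.99, 0.999, 1.0):
        print(f"{p:.1%} of the outcome needs effort of about {effort_to_reach(p):,.0f}")
```

Under these assumptions, reaching 80% takes about 5 units of effort, 90% about 10, 99% about 100 and 99.9% about 1,000, while 100% is never reached at any finite effort. A larger α flattens the curve but does not remove the asymptote.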

Generative AI has made a lot of exciting progress in a very short time. Chatbots can draft text that reads better than what most people can write, and image models can create realistic pictures that look better than what most people can draw.

Inspired by this new technology, many people are investing time and money based on optimistic projections. They are betting that, at the current rate of progress, more resources will get them to 100% faster than their competitors, and that they can then own a whole new market.

The problem is that those optimistic projections will not match reality:

The devil is in the details. There will be more and more hard problems, each taking more effort to move the needle the next 1%. In Generative AI, we’re already seeing concerns about hallucinations, unreliable outputs, model safety and security, and the difficulty of measuring performance. Even after these are solved, there will be more problems to discover and solve.

Strategic Solution

For those who want results on a realistic timeline, my experience from the last AI hype cycle is that the solution is to “move the goalposts and claim victory”.


Look for use-cases that do not require the model to be correct 100% of the time, but where being correct 80% of the time is still useful. For example:

- Instead of an AI professional, build a copilot or assistant that helps professionals work more efficiently. Trying to build an “AI doctor” that makes life-and-death decisions may not deliver a good return on investment.

- Instead of trying to replace customer service people with AI, use AI to make your service people more productive.

- Instead of trying to make an AI that knows everything, make tools that help knowledge-seekers.

- Instead of a fully self-driving car, deliver a working driver-assistance system.

At Topaz Labs, I saw first-hand the importance of picking the right use-case. The hype at the time was around using AI for image recognition, presumably to then automate decisions based on images. Topaz instead focused on using AI for image enhancement. Because the AI was packaged as a tool for people to use, users could be delighted when it produced a great result and painlessly reject results that were not so great.

At Retina AI, we predicted consumer buying behavior via Customer Lifetime Value (CLV). The Retina model predicts future purchases and churn dates with 90-95% accuracy. While predictive CLV has many use-cases, we found success in optimizing marketing campaigns. In part, this is because marketing is inherently about shifting probabilities, and being right 95% of the time can be quite profitable.

A successful early use-case for AI will have a low cost of being wrong. In such a use-case, even if the AI is great only 80% of the time, it can still deliver value. When there is instead a high cost of being wrong, the AI project is set up for failure. It will need high accuracy and reliability, a goal that seems reasonable at the start but ends in a project death march.
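The cost-of-being-wrong argument can be sketched as a simple expected-value calculation. Assume each AI decision gains a benefit B when right and loses a cost C when wrong; then the expected value at accuracy p is p·B - (1 - p)·C, and the break-even accuracy is C / (B + C). The payoff numbers below are hypothetical, chosen only to contrast a cheap-mistake use-case with an expensive-mistake one.

```python
def expected_value(accuracy: float, benefit: float, cost_of_error: float) -> float:
    """Expected value per decision: gain `benefit` when right, lose `cost_of_error` when wrong."""
    return accuracy * benefit - (1.0 - accuracy) * cost_of_error

def break_even_accuracy(benefit: float, cost_of_error: float) -> float:
    """Accuracy where expected value is zero: p * B = (1 - p) * C, so p = C / (B + C)."""
    return cost_of_error / (benefit + cost_of_error)

if __name__ == "__main__":
    # Hypothetical payoffs in arbitrary units (benefit, cost of error), for illustration only.
    scenarios = {
        "low cost of being wrong": (10.0, 1.0),
        "high cost of being wrong": (10.0, 1000.0),
    }
    for name, (benefit, cost) in scenarios.items():
        ev = expected_value(0.8, benefit, cost)
        p_star = break_even_accuracy(benefit, cost)
        print(f"{name}: EV at 80% accuracy = {ev:+.1f}, break-even accuracy = {p_star:.1%}")
```

With these made-up numbers, an 80%-accurate model is comfortably profitable when mistakes are cheap (break-even is about 9% accuracy) but loses money when mistakes are expensive, where break-even accuracy is about 99%.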

Caveat Emptor: with enough effort, new technologies do eventually become reliable enough. Airplanes, for example, did become commercially viable; it just wasn’t the Wright Brothers who benefited.